Imprecise Computers

How do we trust something we can't verify?

Welcome to Fully Distributed, a newsletter about AI, crypto, and other cutting edge technology.

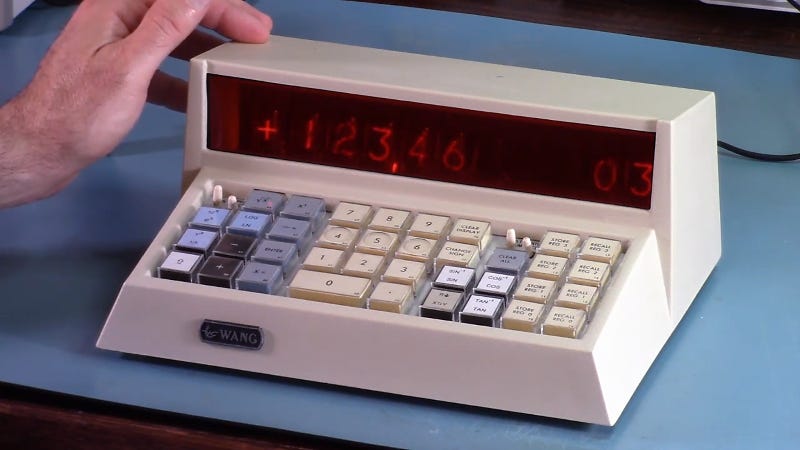

Why do humans blindly trust their calculators?

For the most part, it’s because calculators produce consistent and verifiable results. They are “deterministic” - they always produce the same output for a given set of inputs, with no element of randomness or uncertainty. This rules-based approach extended well beyond simple calculators - until the 1990s, most of our software relied on such deterministic models. This consistency and predictability have conditioned humans to largely trust their computers.

However, the “real world” is highly complex and variable - it is simply impossible for humans to teach machines all of the world’s cause-and-effect relationships. Fortunately, we found a way to get machines to learn about the world on their own - a field now known as “machine learning”. In simple terms, computers can now ingest large swaths of unstructured data and learn the relationships, patterns, and trends within it on their own. Unlike a simple calculator, these AI systems rely on “probabilistic” models that produce the “most likely” answer for a given input, but not necessarily the “right” one. For the first time, computers became imprecise.
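To make the contrast concrete, here is a minimal Python sketch. The labels and scores are made up for illustration and do not come from any real model. The `add` function behaves like a calculator, while `most_likely` turns hypothetical model scores into probabilities and returns the top answer along with how confident it is.

```python
import math

def add(a: float, b: float) -> float:
    """Deterministic: the same inputs always give the same, verifiable output."""
    return a + b

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def most_likely(labels: list[str], scores: list[float]) -> tuple[str, float]:
    """Probabilistic: return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]

# Hypothetical scores a trained model might assign to one image (assumption).
labels = ["cat", "dog", "fox"]
scores = [2.0, 1.5, 0.1]

label, confidence = most_likely(labels, scores)
print(add(2, 2))                    # always 4
print(label, round(confidence, 2))  # "cat", with a probability well below 1
```

The calculator's answer can be checked by hand. The model's answer is only the best guess among the options it knows about, and in this toy case it leaves a sizable chance that "cat" is wrong.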

Since then, imprecise AI systems have grown in scale, complexity and adoption, permeating virtually every part of our daily lives. AI tells us what to watch (Netflix, YouTube, TikTok), what to buy (Amazon, Shein), where to eat (Google Maps) and even who to date (Hinge, Tinder). We got so comfortable relying on AI largely because the customer value proposition has been so high that we simply did not question how these products work or why. Besides, the AI has been abstracted away from the end user to the point that most of us don’t even realize we are interacting with it on a daily basis.

More recently, the overnight success of ChatGPT has earned AI unprecedented consumer mindshare. For the first time, people were overtly aware of the technology they were using, turning AI into a popular dinner conversation topic. However, recent incidents of ChatGPT providing harmful or incorrect answers have sparked debates about AI safety and its role in our society.

So how much should we really be trusting our imprecise machines?

Explainability problem

Since the 1990s, imprecise AI systems have grown considerably in both scale and technical complexity, turning these probabilistic models into giant black boxes. It is now incredibly difficult to evaluate or understand how a model arrives at its conclusions, a phenomenon referred to as the “explainability problem”. Unlike a human, an AI cannot ‘explain’ what it has learned, why, or how. It cannot ‘reflect’ on its discoveries or check its errors. We can only observe the results, not the process that produced them. This is strikingly different from human reasoning, which can be paused, inspected, and repeated by other humans.

Increasingly complex and sophisticated AI systems can shape our social and commercial activity in ways that are not clearly understood today. This makes explainability perhaps one of the biggest challenges facing our society.

Consider whether you would blindly trust an AI’s recommendation that can’t be easily audited or verified in these hypothetical situations:

A medical AI assistant suggests administering a highly unconventional procedure or treatment to a patient in critical condition

A credit underwriting AI agent approves a white applicant’s loan request over a seemingly similar loan request from a black applicant

A social network’s AI moderator flags and suppresses content due to ‘likely misinformation’ that happens to be disproportionately right-leaning

A military AI strategist recommends sacrificing a few hundred civilian lives in a given battle in order to win the entire war

A hedge fund’s AI system makes an obscure and risky $100M investment

An autonomous vehicle elects to hit a pedestrian to protect the driver’s life

While all of the above actions might be perfectly valid and appropriate, they also might not be. But on what basis should the AI’s decision be overridden if we cannot understand its calculations? Would an override ever be justified or should we implement the recommendations on faith alone? If not, are we undermining performance that is superior to ours? How do we know that the system’s objective is aligned with that of the user?

Developing procedures to assess whether an AI performs as expected is therefore vital.

Building a safe AI future

Safe deployment of AI systems is a complex topic and an active area of AI research. This section probably deserves an entire blog post of its own, but I will attempt to offer a simple overview here.

First, we need robust ways both to build safer AI systems and to evaluate how safe or unsafe a given AI system is. This may include:

Rigorous testing / audit of training datasets for bias prior to deployment

Continuous model tuning based on human feedback post deployment (RLHF)

Continuous model tuning based on AI supervision post deployment (RLAIF systems such as Constitutional AI offer a more scalable alternative to RLHF)

Model evaluation and assessment for alignment issues / honesty / harm using mechanistic interpretability (reverse engineering a model’s internal computations), automated red-teaming (stress testing the model with adversarial inputs), etc.
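As a rough illustration of the first item in this list, here is a minimal Python sketch of one very simple dataset check: comparing approval rates across groups in historical loan decisions. The records, the group names, and the 0.8 review threshold are all assumptions made for the example. The threshold is borrowed from the "four-fifths" rule of thumb used in US employment contexts and is not a lending standard. A real audit would go much further than a single ratio.

```python
from collections import defaultdict

def approval_rates(records):
    """Return the share of approved records for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no gap
    return min(rates.values()) / highest

# Made-up historical loan decisions: (group, approved?). Purely illustrative.
records = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

REVIEW_THRESHOLD = 0.8  # illustrative cut-off (assumption, see note above)

rates = approval_rates(records)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

A low ratio does not prove that the data is biased, and a high one does not prove that it is fair. It only flags a gap that a human auditor should look into before a model is trained on the data.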

With that said, technological advancements are only part of the solution - we will also need some form of government involvement to get this right. It is possible that governments may choose to mandate policies that are similar to how they regulate a lot of human professional activity:

Regular testing requirements to maintain a “license” to operate

Compliance monitoring requirements

Formation of an oversight and audit program

Transparency around model training / dataset engineering

It will be critical for governments to strike the right balance, providing guardrails without stifling domestic innovation. Each country’s approach to dealing with AI technologies will have a tremendous impact on its relative competitiveness in the global arena. Societies that choose to suppress AI adoption through draconian regulation will inevitably fall behind those that embrace it.

Closing thoughts

I expect continued advances in AI (and its adoption) to fundamentally alter our reality at a scale comparable to that of the Industrial Revolution. Things that previously seemed impossible will become possible. What took years may take only a few minutes. Human-machine collaboration will become ubiquitous. However, as AI’s role in defining and shaping our society’s information space grows, it becomes that much more important to develop robust systems and frameworks to monitor and regulate it. Getting this right will likely require a joint effort from private enterprise, academia, and government.

I leave you with a few questions that I am currently pondering:

What happens when AI produces insights that are true but beyond the frontiers of current human understanding?

Who will set the alignment objectives for imprecise systems with unexplainable logic and unavoidable consequences? Big Tech, government, AI itself?

How do we solve for the competing and/or incompatible objectives and incentives of all the stakeholders involved (government, private sector, consumers)?

What is the appropriate role of government in shaping AI policies? How do we keep its powers in check and avoid tyranny / oppression?

How do we avoid the formation of an extractive oligopoly of 2-3 AI enterprises?

Let me know what you think! DMs always open on Twitter @leveredvlad
